ek$iEdgeDump

The Problem: Extracting links (connections) from the existing “heap” of entries.

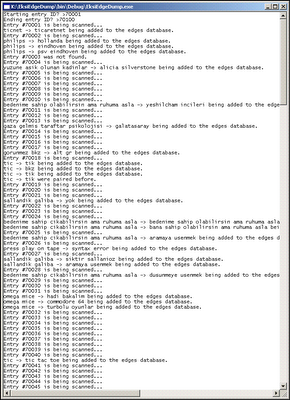

Design: To produce a digraph, ek$iVista needs a list of directed edges, and ek$iEdgeDump was written to supply it. For the sake of simplicity it is, like ek$iDump, a console application. Two versions of ek$iEdgeDump were produced during development.

The first version gets the full list of titles from the database (a table named Titles) and scans them one by one. For the title being scanned, the entries under it are inspected, and any link that points to a title is parsed out of the entry text (links that point to single entries are omitted). Then another database query checks whether the title pointed to by the link exists in the title list. If it does, the pair consisting of the IDs of the source title and the destination title is written to a table named EdgeData, which exists only so that ek$iVista can draw the digraph (each record in the Titles table has a title ID and a title name). This approach proved to be too slow, because every link found in an entry requires its own verification query. The scan rate of this version was less than 1,000 titles/day. Given that the database contained more than 700,000 titles, the job would have taken more than 700 days, that is, nearly two years. Clearly, another approach had to be adopted.
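To make the cost of the first version concrete, here is a minimal sketch of a title-by-title scan with one verification query per link. It is written in Python with SQLite purely for illustration; the actual tool was a C# console application. The `(bkz: ...)` link syntax, the column names (`TitleID`, `TitleName`, `Title`, `EntryText`, `SourceID`, `TargetID`) and the function names are assumptions, not taken from the original code.

```python
import re
import sqlite3

# Assumption: a link to a title looks like "(bkz: some title)", while a link
# to a single entry looks like "(bkz: #12345)" and must be skipped.
TITLE_LINK = re.compile(r"\(bkz:\s*([^)#\s][^)]*)\)")


def extract_title_links(text):
    """Return the title names that an entry's text links to."""
    return [name.strip() for name in TITLE_LINK.findall(text)]


def dump_edges_v1(conn):
    """Scan titles one by one and verify every link with its own query."""
    titles = conn.execute("SELECT TitleID, TitleName FROM Titles").fetchall()
    for source_id, source_name in titles:
        entries = conn.execute(
            "SELECT EntryText FROM Entries WHERE Title = ?", (source_name,)
        ).fetchall()
        for (text,) in entries:
            for target_name in extract_title_links(text):
                # The costly step: one extra database round trip per link.
                row = conn.execute(
                    "SELECT TitleID FROM Titles WHERE TitleName = ?",
                    (target_name,),
                ).fetchone()
                if row is not None:
                    conn.execute(
                        "INSERT INTO EdgeData (SourceID, TargetID) VALUES (?, ?)",
                        (source_id, row[0]),
                    )
    conn.commit()
```

The innermost query is what multiplies the number of database round trips by the number of links, which is consistent with the slow scan rate reported above.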

In the second version of ek$iEdgeDump, the focus is back on the entries instead of the titles. As described for the Entry class in ek$iAPI, one of the details acquired from Ekşi Sözlük when an entry is extracted is the title the entry is placed under. Thus a table similar to EdgeData, holding the source and destination vertices of each directed edge, can be built directly from the entries. In the new approach, the entries in the database (they reside in a table named Entries) are scanned, the links that point to titles are parsed out, and the pair consisting of the names of the source title and the destination title is written to a table named EksiEdgeData, without checking whether the destination title exists in the Titles table. This verification was the factor that slowed the first version down, and ek$iVista can handle it without querying the database (the details are given in the section discussing ek$iVista). Because the title data in the Entries table is stored as strings, rather than as integer foreign keys referring to the title ID column of the Titles table, the EksiEdgeData table is significantly larger than EdgeData. ek$iVista uses the data in the EksiEdgeData table, generated by the latest version of ek$iEdgeDump.
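The entry-centric pass can be sketched as follows, again in Python with SQLite for illustration only (the actual tool was written in C#). The `(bkz: ...)` link syntax, the column names `Title`, `EntryText`, `SourceTitle` and `TargetTitle`, and the function name are assumptions. The point of the sketch is that no lookup against Titles happens while the edges are written.

```python
import re
import sqlite3

# Assumption: "(bkz: some title)" links to a title, "(bkz: #12345)" links to
# a single entry and is ignored.
TITLE_LINK = re.compile(r"\(bkz:\s*([^)#\s][^)]*)\)")


def dump_edges_v2(conn):
    """Scan every entry once and store (source title, target title) pairs.

    Returns the number of pairs written. The target title is NOT verified
    against the Titles table; that check is left to the consumer.
    """
    # fetchall() keeps the sketch short; a real run over millions of entries
    # would read the table in batches instead.
    rows = conn.execute("SELECT Title, EntryText FROM Entries").fetchall()
    pairs = [
        (source_title, target.strip())
        for source_title, text in rows
        for target in TITLE_LINK.findall(text)
    ]
    conn.executemany(
        "INSERT INTO EksiEdgeData (SourceTitle, TargetTitle) VALUES (?, ?)",
        pairs,
    )
    conn.commit()
    return len(pairs)
```

Storing names instead of integer IDs is also what makes this table larger than EdgeData, as noted above.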

23.06.2006

comp 491: report | ek$iEdgeDump

posted by: egiboy

stardate: 23.6.06

tags: graduation project, ek$i sozluk, ek$iAPI, ek$iDump, ek$iEdgeDump, ek$iVista, programming, report, technical

comp 491: report | ek$iDump

ek$iDump

The Problem: Coming up with a portable application to acquire Ekşi Sözlük entries and store them in a database.

Design: Although the final product of the project will be a graph depicting connections between Ekşi Sözlük titles, the connections arise from the content of the titles, which is, obviously, the entries. That is one of the reasons why ek$iDump is a tool for getting the entries rather than the titles. Another, and perhaps the prime, reason for focusing on entries is that entries have unique integer IDs, which allow them to be acquired one by one in a for-loop or a while-loop.
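Because entries are addressed by integer IDs, the acquisition step reduces to a simple loop over an ID range. The sketch below is in Python for illustration only; the actual tool was written in C#. `fetch_entry` and `store_entry` are hypothetical callables standing in for the ek$iAPI call and the database write, and treating the terminal ID as inclusive is an assumption.

```python
def dump_entries(start_id, end_id, fetch_entry, store_entry):
    """Walk entry IDs from start_id to end_id (inclusive, by assumption).

    fetch_entry(entry_id) is expected to return None when no entry has that
    ID; store_entry(entry) persists one acquired entry. Both are hypothetical
    stand-ins. Returns the number of entries stored.
    """
    stored = 0
    entry_id = start_id
    while entry_id <= end_id:
        entry = fetch_entry(entry_id)
        if entry is not None:  # IDs without an entry are simply skipped
            store_entry(entry)
            stored += 1
        entry_id += 1
    return stored
```

For example, with a dictionary acting as a fake site, `dump_entries(1, 5, fake.get, saved.append)` stores only the entries whose IDs are present in `fake`.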

Because of this property of Ekşi Sözlük entries, ek$iDump is at the level of complexity of a “Hello World” program. The program, designed as a console application, takes the starting ID and the terminal ID, both integers, as its input. In a while-loop, beginning from the starting ID, if the entry with the given ID exists, it gets it from Ekşi Sözlük by calling Entry.GetFromEksi(ID) and writes the details of the acquired Entry to the database. The database of choice is a Microsoft Access file, because one does not have to set up a server to use it; even without Microsoft Access installed, one can obtain and install a package named Office 2003 Redistributable Primary Interop Assemblies and get on with using the database. Also, the data accumulated in the database is easily exportable to Microsoft SQL Server, which the other two parts of the project, ek$iEdgeDump and ek$iVista, use. One always has to make the leap from Microsoft Access to a professional-grade RDBMS at some point, as Microsoft Access imposes a size limit of 2 GB on a database file. Note that, although it is by no means final, the size of the database file generated in the course of the project exceeds 5 GB. An image showing ek$iDump in action is given in the figure below:


comp 491: report | project divisions

Project Divisions

The Ekşi Sözlük graph visualization project consists of four distinct applications:

- ek$iAPI: Class library (basically, a Windows DLL) for acquiring, manipulating and organizing Ekşi Sözlük data, coded in C#

- ek$iDump: A console application coded in C# which exploits ek$iAPI to retrieve entries from Ekşi Sözlük and “dump” them into an MS Access Database

- ek$iEdgeDump: A console application coded in C# which processes the entries acquired by ek$iDump and finds links between titles

- ek$iVista: The final application that outputs an image file depicting the connections between titles in Ekşi Sözlük using the data generated by ek$iEdgeDump.
